Three or four years ago I sat down to write a paper about how, even without any new privacy legislation, there was a lot that U.S. administrative agencies could do under existing powers to enhance personal privacy if they were so minded. As I started writing the introduction to that piece, I went looking for a citation for a proposition that I thought was obvious, and that many other people also take for granted: that often privacy enhances safety. To my amazement, I couldn’t find a clear statement of the proposition anywhere, although there were plenty of people willing to explain why they thought anonymity made people unsafe, as it let the bad guys toil in the darkness. Which is undoubtedly true sometimes, but far from the whole story.

So, back then, instead of writing the paper I wanted to write, I wrote what I thought of as the prequel, which became Privacy as Safety, 95 Wash. L. Rev. 141 (2020) (with Zak Colangelo).

Now, finally, I, along with co-authors Phillip Arencibia and P. Zak Colangelo-Trenner, have a draft of the paper I originally wanted to write. We’ve just put it up on SSRN as Safety as Privacy.

Here’s the abstract:

New technologies, such as internet-connected home devices we have come to call ‘the Internet of Things (IoT)’, connected cars, sensors, drones, internet-connected medical devices, and workplace monitoring of every sort, create privacy gaps that can cause danger to people. In Privacy as Safety, 95 Wash. L. Rev. 141 (2020), two of us sought to emphasize the deep connection between privacy and safety, in order to lay a foundation for arguing that U.S. administrative agencies with a safety mission can and should make privacy protection one of their goals. This article builds on that foundation with a detailed look at the safety missions of several agencies. In each case, we argue that the agency has the discretion, if not necessarily the duty, to demand enhanced privacy practices from those within its jurisdiction, and that the agency should make use of that discretion.

This is the first article in the legal literature to identify the substantial gains to personal privacy that several U.S. agencies tasked with protecting safety could achieve under their existing statutory authority. Examples of agencies with untapped potential include the Federal Trade Commission (FTC), the Consumer Product Safety Commission (CPSC), the Food and Drug Administration (FDA), the National Highway Traffic Safety Administration (NHTSA), the Federal Aviation Administration (FAA), and the Occupational Safety and Health Administration (OSHA). Five of these agencies have an explicit duty to protect the public against threats to safety (or against risk of injury) and thus – as we have argued previously – should protect the public’s privacy when the absence of privacy can create a danger. The FTC’s general authority to fight unfair practices in commerce enables it to regulate commercial practices threatening consumer privacy. The FAA’s duty to ensure air safety could extend beyond airworthiness to regulating spying via drones. The CPSC’s authority to protect against unsafe products authorizes it to regulate products putting consumers’ physical and financial privacy at risk, thus sweeping in many products associated with the IoT. NHTSA’s authority to regulate dangerous practices on the road encompasses authority to require smart car manufacturers to include precautions protecting drivers from misuses of connected car data, whether due to the car-maker’s intention or to security lapses caused by its inattention. Lastly, OSHA’s authority to require safe work environments encompasses protecting workers from privacy risks that threaten their physical and financial safety on the job.

Arguably, an omnibus federal statute regulating data privacy would be preferable to doubling down on the U.S.’s notoriously sectoral approach to privacy regulation. Here, however, we say only that until the political stars align for some future omnibus proposal, there is value in exploring methods that are within our current means. Such an approach may be only second best, but it is also much easier to implement. Thus, we offer reasonable legal constructions of certain extant federal statutes that would justify more extensive privacy regulation in the name of providing enhanced safety, a regime that, we argue, would be a substantial improvement over the status quo yet would not require any new legislation, just a better understanding of certain agencies’ current powers and authorities. Agencies with suitably capacious safety missions should take the opportunity to regulate to protect relevant personal privacy without delay.

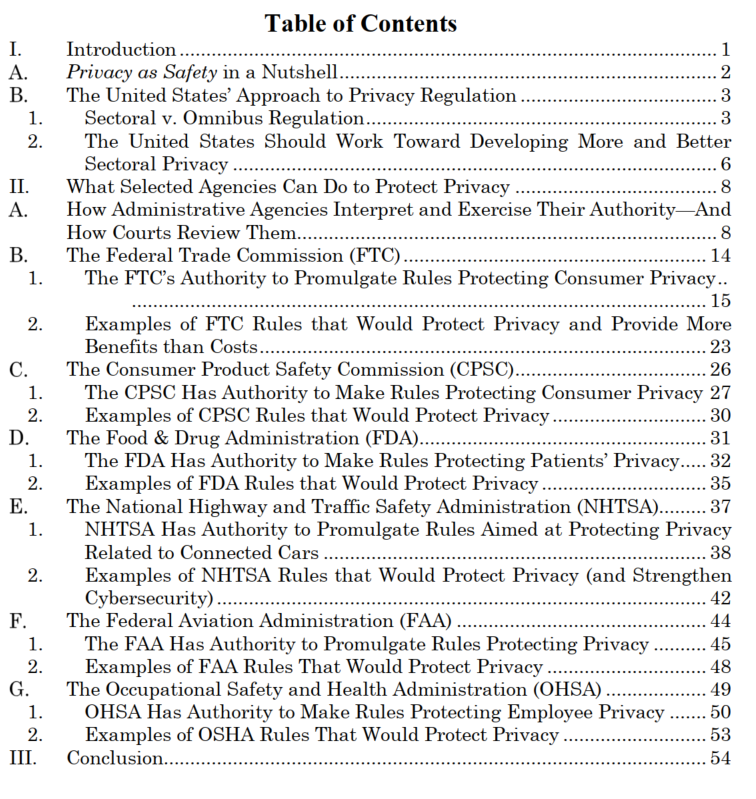

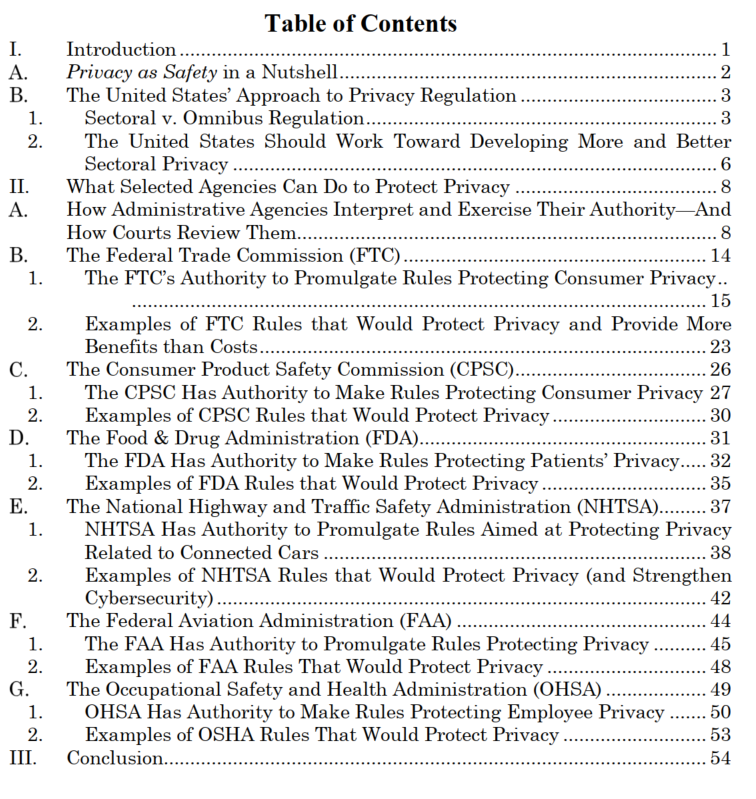

And here’s the table of contents:

It’s just a draft so comments (and offers to publish in a law review) are welcome!
